Mirror of https://github.com/ManInDark/bootc-image.git (synced 2026-01-20 14:34:28 +01:00)

FEAT: customize everything


.github/dependabot.yml (vendored, 11 lines changed)

```diff
@@ -1,11 +0,0 @@
-# To get started with Dependabot version updates, you'll need to specify which
-# package ecosystems to update and where the package manifests are located.
-# Please see the documentation for all configuration options:
-# https://docs.github.com/github/administering-a-repository/configuration-options-for-dependency-updates
-
-version: 2
-updates:
-  - package-ecosystem: "github-actions"
-    directory: "/"
-    schedule:
-      interval: "weekly"
```

.github/renovate.json5 (vendored, 21 lines changed)

```diff
@@ -1,21 +0,0 @@
-{
-  "$schema": "https://docs.renovatebot.com/renovate-schema.json",
-  "extends": [
-    "config:best-practices",
-  ],
-
-  "rebaseWhen": "never",
-
-  "packageRules": [
-    {
-      "automerge": true,
-      "matchUpdateTypes": ["pin", "pinDigest"]
-    },
-    {
-      "enabled": false,
-      "matchUpdateTypes": ["digest", "pinDigest", "pin"],
-      "matchDepTypes": ["container"],
-      "matchFileNames": [".github/workflows/**.yaml", ".github/workflows/**.yml"],
-    },
-  ]
-}
```

.github/workflows/build-disk.yml (vendored, 23 lines changed)

```diff
@@ -4,11 +4,6 @@ name: Build disk images
 on:
   workflow_dispatch:
     inputs:
-      upload-to-s3:
-        description: "Upload to S3"
-        required: false
-        default: false
-        type: boolean
       platform:
         required: true
         type: choice
```

```diff
@@ -89,7 +84,7 @@ jobs:
           additional-args: --use-librepo=True
 
       - name: Upload disk images and Checksum to Job Artifacts
-        if: inputs.upload-to-s3 != true && github.event_name != 'pull_request'
+        if: github.event_name != 'pull_request'
         uses: actions/upload-artifact@ea165f8d65b6e75b540449e92b4886f43607fa02 # v4
         with:
           path: ${{ steps.build.outputs.output-directory }}
```

```diff
@@ -97,19 +92,3 @@ jobs:
           retention-days: 0
           compression-level: 0
           overwrite: true
-
-      - name: Upload to S3
-        if: inputs.upload-to-s3 == true && github.event_name != 'pull_request'
-        shell: bash
-        env:
-          RCLONE_CONFIG_S3_TYPE: s3
-          RCLONE_CONFIG_S3_PROVIDER: ${{ secrets.S3_PROVIDER }}
-          RCLONE_CONFIG_S3_ACCESS_KEY_ID: ${{ secrets.S3_ACCESS_KEY_ID }}
-          RCLONE_CONFIG_S3_SECRET_ACCESS_KEY: ${{ secrets.S3_SECRET_ACCESS_KEY }}
-          RCLONE_CONFIG_S3_REGION: ${{ secrets.S3_REGION }}
-          RCLONE_CONFIG_S3_ENDPOINT: ${{ secrets.S3_ENDPOINT }}
-          SOURCE_DIR: ${{ steps.build.outputs.output-directory }}
-        run: |
-          sudo apt-get update
-          sudo apt-get install -y rclone
-          rclone copy $SOURCE_DIR S3:${{ secrets.S3_BUCKET_NAME }}
```

.github/workflows/build.yml (vendored, 96 lines changed)

```diff
@@ -5,7 +5,7 @@ on:
     branches:
       - main
   schedule:
-    - cron: '05 10 * * *' # 10:05am UTC everyday
+    - cron: '30 1 * * *'
   push:
     branches:
       - main
```

```diff
@@ -14,11 +14,10 @@ on:
   workflow_dispatch:
 
 env:
-  IMAGE_DESC: "My Customized Universal Blue Image"
-  IMAGE_KEYWORDS: "bootc,ublue,universal-blue"
-  IMAGE_LOGO_URL: "https://avatars.githubusercontent.com/u/120078124?s=200&v=4" # Put your own image here for a fancy profile on https://artifacthub.io/!
-  IMAGE_NAME: "${{ github.event.repository.name }}" # output image name, usually same as repo name
-  IMAGE_REGISTRY: "ghcr.io/${{ github.repository_owner }}" # do not edit
+  IMAGE_DESC: "Customized bootc Image"
+  IMAGE_KEYWORDS: "bootc"
+  IMAGE_NAME: "${{ github.event.repository.name }}"
+  IMAGE_REGISTRY: "ghcr.io/${{ github.repository_owner }}"
   DEFAULT_TAG: "latest"
 
 concurrency:
```

```diff
@@ -42,23 +41,17 @@ jobs:
           echo "IMAGE_REGISTRY=${IMAGE_REGISTRY,,}" >> ${GITHUB_ENV}
           echo "IMAGE_NAME=${IMAGE_NAME,,}" >> ${GITHUB_ENV}
 
-      # These stage versions are pinned by https://github.com/renovatebot/renovate
       - name: Checkout
         uses: actions/checkout@08c6903cd8c0fde910a37f88322edcfb5dd907a8 # v5
 
-      # You only use this action if the container-storage-action proves to be unreliable, you don't need to enable both
-      # This is optional, but if you see that your builds are way too big for the runners, you can enable this by uncommenting the following lines:
-      # - name: Maximize build space
-      #   uses: ublue-os/remove-unwanted-software@695eb75bc387dbcd9685a8e72d23439d8686cba6
-      #   with:
-      #     remove-codeql: true
+      - name: Maximize build space
+        uses: ublue-os/remove-unwanted-software@695eb75bc387dbcd9685a8e72d23439d8686cba6
+        with:
+          remove-codeql: true
 
       - name: Mount BTRFS for podman storage
         id: container-storage-action
         uses: ublue-os/container-storage-action@911baca08baf30c8654933e9e9723cb399892140
-
-        # Fallback to the remove-unwanted-software-action if github doesn't allocate enough space
-        # See: https://github.com/ublue-os/container-storage-action/pull/11
         continue-on-error: true
         with:
           target-dir: /var/lib/containers
```
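The two `echo` context lines at the top of the hunk above lowercase `IMAGE_REGISTRY` and `IMAGE_NAME` with Bash's `${VAR,,}` case-conversion expansion before appending them to `$GITHUB_ENV`. Container registries such as ghcr.io do not accept uppercase letters in repository names, and the repository owner here (`ManInDark`) contains some. A minimal local sketch of that step, assuming Bash 4 or newer; the mixed-case repository name `Bootc-Image` is hypothetical, and a temporary file stands in for the `$GITHUB_ENV` file that GitHub Actions provides:

```shell
#!/usr/bin/env bash
# Sketch: lowercase the image coordinates the way the workflow's echo lines do.
# Requires Bash 4+ for the ${VAR,,} expansion.
set -euo pipefail

# Hypothetical inputs; in the workflow they are derived from
# github.repository_owner and github.event.repository.name.
IMAGE_REGISTRY="ghcr.io/ManInDark"
IMAGE_NAME="Bootc-Image"

# Temporary stand-in for the $GITHUB_ENV file, removed when the script exits.
GITHUB_ENV=$(mktemp)
trap 'rm -f "${GITHUB_ENV}"' EXIT

echo "IMAGE_REGISTRY=${IMAGE_REGISTRY,,}" >> "${GITHUB_ENV}"
echo "IMAGE_NAME=${IMAGE_NAME,,}" >> "${GITHUB_ENV}"

# Prints IMAGE_REGISTRY=ghcr.io/manindark and IMAGE_NAME=bootc-image
cat "${GITHUB_ENV}"
```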

```diff
@@ -67,20 +60,12 @@ jobs:
       - name: Get current date
         id: date
         run: |
-          # This generates a timestamp like what is defined on the ArtifactHub documentation
-          # E.G: 2022-02-08T15:38:15Z'
-          # https://artifacthub.io/docs/topics/repositories/container-images/
-          # https://linux.die.net/man/1/date
           echo "date=$(date -u +%Y\-%m\-%d\T%H\:%M\:%S\Z)" >> $GITHUB_OUTPUT
 
-      # Image metadata for https://artifacthub.io/ - This is optional but is highly recommended so we all can get a index of all the custom images
-      # The metadata by itself is not going to do anything, you choose if you want your image to be on ArtifactHub or not.
       - name: Image Metadata
         uses: docker/metadata-action@c1e51972afc2121e065aed6d45c65596fe445f3f # v5
         id: metadata
         with:
-          # This generates all the tags for your image, you can add custom tags here too!
-          # Default tags are "$DEFAULT_TAG" and "$DEFAULT_TAG.$date".
           tags: |
             type=raw,value=${{ env.DEFAULT_TAG }}
             type=raw,value=${{ env.DEFAULT_TAG }}.{{date 'YYYYMMDD'}}
```
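After this commit the `Get current date` step still writes a UTC timestamp in the ArtifactHub style that the removed comment illustrated (`2022-02-08T15:38:15Z`), and the metadata step still produces the two tags `$DEFAULT_TAG` and `$DEFAULT_TAG.YYYYMMDD`. The sketch below reproduces both values locally. It assumes `DEFAULT_TAG` is `latest`, as in the workflow's `env` block, and that `{{date 'YYYYMMDD'}}` is rendered in UTC. The backslashes in the workflow's `date` format string are removed by the shell before `date` runs, so the plain format used here is equivalent.

```shell
#!/usr/bin/env bash
# Sketch: reproduce the workflow's timestamp output and its two default tags.
set -euo pipefail

DEFAULT_TAG="latest"   # matches env.DEFAULT_TAG in the workflow

# Same format string the step passes to date, without the redundant escapes.
stamp=$(date -u +%Y-%m-%dT%H:%M:%SZ)

# Derive YYYYMMDD from that single clock reading so both values agree,
# even if the script happens to run exactly at midnight UTC.
day=${stamp%%T*}   # e.g. 2026-01-20
day=${day//-/}     # e.g. 20260120

tags=("${DEFAULT_TAG}" "${DEFAULT_TAG}.${day}")

echo "date=${stamp}"
printf 'tag: %s\n' "${tags[@]}"
```

In the workflow itself the timestamp and the dated tag come from two separate steps; reading the clock once is simply a convenience of this local sketch.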

```diff
@@ -112,39 +97,30 @@ jobs:
         with:
           containerfiles: |
             ./Containerfile
-          # Postfix image name with -custom to make it a little more descriptive
-          # Syntax: https://docs.github.com/en/actions/learn-github-actions/expressions#format
           image: ${{ env.IMAGE_NAME }}
           tags: ${{ steps.metadata.outputs.tags }}
           labels: ${{ steps.metadata.outputs.labels }}
           oci: false
 
-      # Rechunk is a script that we use on Universal Blue to make sure there isnt a single huge layer when your image gets published.
-      # This does not make your image faster to download, just provides better resumability and fixes a few errors.
-      # Documentation for Rechunk is provided on their github repository at https://github.com/hhd-dev/rechunk
-      # You can enable it by uncommenting the following lines:
-      # - name: Run Rechunker
-      #   id: rechunk
-      #   uses: hhd-dev/rechunk@f153348d8100c1f504dec435460a0d7baf11a9d2 # v1.1.1
-      #   with:
-      #     rechunk: 'ghcr.io/hhd-dev/rechunk:v1.0.1'
-      #     ref: "localhost/${{ env.IMAGE_NAME }}:${{ env.DEFAULT_TAG }}"
-      #     prev-ref: "${{ env.IMAGE_REGISTRY }}/${{ env.IMAGE_NAME }}:${{ env.DEFAULT_TAG }}"
-      #     skip_compression: true
-      #     version: ${{ env.CENTOS_VERSION }}
-      #     labels: ${{ steps.metadata.outputs.labels }} # Rechunk strips out all the labels during build, this needs to be reapplied here with newline separator
+      - name: Run Rechunker
+        id: rechunk
+        uses: hhd-dev/rechunk@f153348d8100c1f504dec435460a0d7baf11a9d2 # v1.1.1
+        with:
+          rechunk: 'ghcr.io/hhd-dev/rechunk:v1.0.1'
+          ref: "localhost/${{ env.IMAGE_NAME }}:${{ env.DEFAULT_TAG }}"
+          prev-ref: "${{ env.IMAGE_REGISTRY }}/${{ env.IMAGE_NAME }}:${{ env.DEFAULT_TAG }}"
+          skip_compression: true
+          version: ${{ env.CENTOS_VERSION }}
+          labels: ${{ steps.metadata.outputs.labels }}
 
-      # This is necessary so that the podman socket can find the rechunked image on its storage
-      # - name: Load in podman and tag
-      #   run: |
-      #     IMAGE=$(podman pull ${{ steps.rechunk.outputs.ref }})
-      #     sudo rm -rf ${{ steps.rechunk.outputs.output }}
-      #     for tag in ${{ steps.metadata.outputs.tags }}; do
-      #       podman tag $IMAGE ${{ env.IMAGE_NAME }}:$tag
-      #     done
+      - name: Load in podman and tag
+        run: |
+          IMAGE=$(podman pull ${{ steps.rechunk.outputs.ref }})
+          sudo rm -rf ${{ steps.rechunk.outputs.output }}
+          for tag in ${{ steps.metadata.outputs.tags }}; do
+            podman tag $IMAGE ${{ env.IMAGE_NAME }}:$tag
+          done
 
-      # These `if` statements are so that pull requests for your custom images do not make it publish any packages under your name without you knowing
-      # They also check if the runner is on the default branch so that things like the merge queue (if you enable it), are going to work
       - name: Login to GitHub Container Registry
         uses: docker/login-action@5e57cd118135c172c3672efd75eb46360885c0ef # v3
         if: github.event_name != 'pull_request' && github.ref == format('refs/heads/{0}', github.event.repository.default_branch)
```
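The newly enabled `Load in podman and tag` step pulls the rechunked image and applies every tag from the metadata step to it. GitHub Actions substitutes `${{ steps.metadata.outputs.tags }}` into the script text before Bash runs, so the unquoted `for` loop iterates over the words of that output. The sketch below mirrors the loop with `echo` in place of `podman`. The image ID, the repository name and the date in the second tag are hypothetical, and it assumes the tags output is a space-separated list of bare tags; a newline-separated list substituted into the script text would end the `for` word list early and break the loop.

```shell
#!/usr/bin/env bash
# Sketch of the "Load in podman and tag" loop, with podman replaced by echo.
set -euo pipefail

IMAGE_NAME="bootc-image"   # hypothetical, already lowercased
IMAGE="3f1c2a9b7d4e"       # hypothetical ID that `podman pull` would print
# Assumed shape of steps.metadata.outputs.tags after substitution.
TAGS="latest latest.20260120"

tagged=()
# Left unquoted on purpose: the shell splits it into one word per tag,
# just as the substituted expression is split in the workflow.
for tag in ${TAGS}; do
    tagged+=("${IMAGE_NAME}:${tag}")
    echo "podman tag ${IMAGE} ${IMAGE_NAME}:${tag}"
done
```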

```diff
@@ -166,23 +142,3 @@ jobs:
           tags: ${{ steps.metadata.outputs.tags }}
           username: ${{ env.REGISTRY_USER }}
           password: ${{ env.REGISTRY_PASSWORD }}
-
-      # This section is optional and only needs to be enabled if you plan on distributing
-      # your project for others to consume. You will need to create a public and private key
-      # using Cosign and save the private key as a repository secret in Github for this workflow
-      # to consume. For more details, review the image signing section of the README.
-      - name: Install Cosign
-        uses: sigstore/cosign-installer@d7543c93d881b35a8faa02e8e3605f69b7a1ce62 # v3.10.0
-        if: github.event_name != 'pull_request' && github.ref == format('refs/heads/{0}', github.event.repository.default_branch)
-
-      - name: Sign container image
-        if: github.event_name != 'pull_request' && github.ref == format('refs/heads/{0}', github.event.repository.default_branch)
-        run: |
-          IMAGE_FULL="${{ env.IMAGE_REGISTRY }}/${{ env.IMAGE_NAME }}"
-          for tag in ${{ steps.metadata.outputs.tags }}; do
-            cosign sign -y --key env://COSIGN_PRIVATE_KEY $IMAGE_FULL:$tag
-          done
-        env:
-          TAGS: ${{ steps.push.outputs.digest }}
-          COSIGN_EXPERIMENTAL: false
-          COSIGN_PRIVATE_KEY: ${{ secrets.SIGNING_SECRET }}
```

Containerfile

```diff
@@ -1,33 +1,9 @@
 # Allow build scripts to be referenced without being copied into the final image
 FROM scratch AS ctx
 COPY build_files /
-
-# Base Image
-FROM ghcr.io/ublue-os/bazzite:stable
+FROM quay.io/fedora/fedora-bootc:44
 
-## Other possible base images include:
-# FROM ghcr.io/ublue-os/bazzite:latest
-# FROM ghcr.io/ublue-os/bluefin-nvidia:stable
-#
-# ... and so on, here are more base images
-# Universal Blue Images: https://github.com/orgs/ublue-os/packages
-# Fedora base image: quay.io/fedora/fedora-bootc:41
-# CentOS base images: quay.io/centos-bootc/centos-bootc:stream10
-
-### [IM]MUTABLE /opt
-## Some bootable images, like Fedora, have /opt symlinked to /var/opt, in order to
-## make it mutable/writable for users. However, some packages write files to this directory,
-## thus its contents might be wiped out when bootc deploys an image, making it troublesome for
-## some packages. Eg, google-chrome, docker-desktop.
-##
-## Uncomment the following line if one desires to make /opt immutable and be able to be used
-## by the package manager.
-
-# RUN rm /opt && mkdir /opt
-
-### MODIFICATIONS
-## make modifications desired in your image and install packages by modifying the build.sh script
-## the following RUN directive does all the things required to run "build.sh" as recommended.
+RUN rm -r /opt && mkdir /opt
 
 RUN --mount=type=bind,from=ctx,source=/,target=/ctx \
     --mount=type=cache,dst=/var/cache \
```

```diff
@@ -35,6 +11,4 @@ RUN --mount=type=bind,from=ctx,source=/,target=/ctx \
     --mount=type=tmpfs,dst=/tmp \
     /ctx/build.sh
 
-### LINTING
-## Verify final image and contents are correct.
 RUN bootc container lint
```
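The comment removed from the Containerfile explains that some bootable images, such as Fedora, ship `/opt` as a symlink to `/var/opt`, so files that packages install there may be wiped out when bootc deploys an image. The commit now unconditionally runs `RUN rm -r /opt && mkdir /opt`, making `/opt` an ordinary directory baked into the image. A minimal sketch of that replacement inside a throwaway directory rather than a real image root; the `vendor` subdirectory and the relative link target are hypothetical. `rm` never follows a symlink, so `rm -r` deletes only the link and leaves the `var/opt` tree intact, and unlike plain `rm` it also succeeds if `/opt` is already a real directory.

```shell
#!/usr/bin/env bash
# Sketch: turn an opt -> var/opt symlink into a real directory, as the
# Containerfile's RUN line does for /opt, but inside a temporary root.
set -euo pipefail

root=$(mktemp -d)                  # stand-in for the image's root filesystem
trap 'rm -rf "${root}"' EXIT

mkdir -p "${root}/var/opt/vendor"  # hypothetical existing content under var/opt
ln -s var/opt "${root}/opt"        # opt is a relative symlink into var/opt

# Equivalent of: RUN rm -r /opt && mkdir /opt
# rm does not follow the symlink, so only the link itself is removed.
rm -r "${root}/opt" && mkdir "${root}/opt"

if [[ -d "${root}/opt" && ! -L "${root}/opt" ]]; then
    echo "opt is now a real directory"
fi
ls "${root}/var/opt"               # prints: vendor
```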

Justfile (319 lines changed)

```diff
@@ -1,319 +0,0 @@
-export image_name := env("IMAGE_NAME", "image-template") # output image name, usually same as repo name, change as needed
-export default_tag := env("DEFAULT_TAG", "latest")
-export bib_image := env("BIB_IMAGE", "quay.io/centos-bootc/bootc-image-builder:latest")
-
-alias build-vm := build-qcow2
-alias rebuild-vm := rebuild-qcow2
-alias run-vm := run-vm-qcow2
-
-[private]
-default:
-    @just --list
-
-# Check Just Syntax
-[group('Just')]
-check:
-    #!/usr/bin/bash
-    find . -type f -name "*.just" | while read -r file; do
-        echo "Checking syntax: $file"
-        just --unstable --fmt --check -f $file
-    done
-    echo "Checking syntax: Justfile"
-    just --unstable --fmt --check -f Justfile
-
-# Fix Just Syntax
-[group('Just')]
-fix:
-    #!/usr/bin/bash
-    find . -type f -name "*.just" | while read -r file; do
-        echo "Checking syntax: $file"
-        just --unstable --fmt -f $file
-    done
-    echo "Checking syntax: Justfile"
-    just --unstable --fmt -f Justfile || { exit 1; }
-
-# Clean Repo
-[group('Utility')]
-clean:
-    #!/usr/bin/bash
-    set -eoux pipefail
-    touch _build
-    find *_build* -exec rm -rf {} \;
-    rm -f previous.manifest.json
-    rm -f changelog.md
-    rm -f output.env
-    rm -f output/
-
-# Sudo Clean Repo
-[group('Utility')]
-[private]
-sudo-clean:
-    just sudoif just clean
-
-# sudoif bash function
-[group('Utility')]
-[private]
-sudoif command *args:
-    #!/usr/bin/bash
-    function sudoif(){
-        if [[ "${UID}" -eq 0 ]]; then
-            "$@"
-        elif [[ "$(command -v sudo)" && -n "${SSH_ASKPASS:-}" ]] && [[ -n "${DISPLAY:-}" || -n "${WAYLAND_DISPLAY:-}" ]]; then
-            /usr/bin/sudo --askpass "$@" || exit 1
-        elif [[ "$(command -v sudo)" ]]; then
-            /usr/bin/sudo "$@" || exit 1
-        else
-            exit 1
-        fi
-    }
-    sudoif {{ command }} {{ args }}
-
-# This Justfile recipe builds a container image using Podman.
-#
-# Arguments:
-# $target_image - The tag you want to apply to the image (default: $image_name).
-# $tag - The tag for the image (default: $default_tag).
-#
-# The script constructs the version string using the tag and the current date.
-# If the git working directory is clean, it also includes the short SHA of the current HEAD.
-#
-# just build $target_image $tag
-#
-# Example usage:
-# just build aurora lts
-#
-# This will build an image 'aurora:lts' with DX and GDX enabled.
-#
-
-# Build the image using the specified parameters
-build $target_image=image_name $tag=default_tag:
-    #!/usr/bin/env bash
-
-    BUILD_ARGS=()
-    if [[ -z "$(git status -s)" ]]; then
-        BUILD_ARGS+=("--build-arg" "SHA_HEAD_SHORT=$(git rev-parse --short HEAD)")
-    fi
-
-    podman build \
-        "${BUILD_ARGS[@]}" \
-        --pull=newer \
-        --tag "${target_image}:${tag}" \
-        .
-
-# Command: _rootful_load_image
-# Description: This script checks if the current user is root or running under sudo. If not, it attempts to resolve the image tag using podman inspect.
-# If the image is found, it loads it into rootful podman. If the image is not found, it pulls it from the repository.
-#
-# Parameters:
-# $target_image - The name of the target image to be loaded or pulled.
-# $tag - The tag of the target image to be loaded or pulled. Default is 'default_tag'.
-#
-# Example usage:
-# _rootful_load_image my_image latest
-#
-# Steps:
-# 1. Check if the script is already running as root or under sudo.
-# 2. Check if target image is in the non-root podman container storage)
-# 3. If the image is found, load it into rootful podman using podman scp.
-# 4. If the image is not found, pull it from the remote repository into reootful podman.
-
-_rootful_load_image $target_image=image_name $tag=default_tag:
-    #!/usr/bin/bash
-    set -eoux pipefail
-
-    # Check if already running as root or under sudo
-    if [[ -n "${SUDO_USER:-}" || "${UID}" -eq "0" ]]; then
-        echo "Already root or running under sudo, no need to load image from user podman."
-        exit 0
-    fi
-
-    # Try to resolve the image tag using podman inspect
-    set +e
-    resolved_tag=$(podman inspect -t image "${target_image}:${tag}" | jq -r '.[].RepoTags.[0]')
-    return_code=$?
-    set -e
-
-    USER_IMG_ID=$(podman images --filter reference="${target_image}:${tag}" --format "'{{ '{{.ID}}' }}'")
-
-    if [[ $return_code -eq 0 ]]; then
-        # If the image is found, load it into rootful podman
-        ID=$(just sudoif podman images --filter reference="${target_image}:${tag}" --format "'{{ '{{.ID}}' }}'")
-        if [[ "$ID" != "$USER_IMG_ID" ]]; then
-            # If the image ID is not found or different from user, copy the image from user podman to root podman
-            COPYTMP=$(mktemp -p "${PWD}" -d -t _build_podman_scp.XXXXXXXXXX)
-            just sudoif TMPDIR=${COPYTMP} podman image scp ${UID}@localhost::"${target_image}:${tag}" root@localhost::"${target_image}:${tag}"
-            rm -rf "${COPYTMP}"
-        fi
-    else
-        # If the image is not found, pull it from the repository
-        just sudoif podman pull "${target_image}:${tag}"
-    fi
-
-# Build a bootc bootable image using Bootc Image Builder (BIB)
-# Converts a container image to a bootable image
-# Parameters:
-# target_image: The name of the image to build (ex. localhost/fedora)
-# tag: The tag of the image to build (ex. latest)
-# type: The type of image to build (ex. qcow2, raw, iso)
-# config: The configuration file to use for the build (default: disk_config/disk.toml)
-
-# Example: just _rebuild-bib localhost/fedora latest qcow2 disk_config/disk.toml
-_build-bib $target_image $tag $type $config: (_rootful_load_image target_image tag)
-    #!/usr/bin/env bash
-    set -euo pipefail
-
-    args="--type ${type} "
-    args+="--use-librepo=True "
-    args+="--rootfs=btrfs"
-
-    BUILDTMP=$(mktemp -p "${PWD}" -d -t _build-bib.XXXXXXXXXX)
-
-    sudo podman run \
-      --rm \
-      -it \
-      --privileged \
-      --pull=newer \
-      --net=host \
-      --security-opt label=type:unconfined_t \
-      -v $(pwd)/${config}:/config.toml:ro \
-      -v $BUILDTMP:/output \
-      -v /var/lib/containers/storage:/var/lib/containers/storage \
-      "${bib_image}" \
-      ${args} \
-      "${target_image}:${tag}"
-
-    mkdir -p output
-    sudo mv -f $BUILDTMP/* output/
-    sudo rmdir $BUILDTMP
-    sudo chown -R $USER:$USER output/
-
-# Podman builds the image from the Containerfile and creates a bootable image
-# Parameters:
-# target_image: The name of the image to build (ex. localhost/fedora)
-# tag: The tag of the image to build (ex. latest)
-# type: The type of image to build (ex. qcow2, raw, iso)
-# config: The configuration file to use for the build (deafult: disk_config/disk.toml)
-
-# Example: just _rebuild-bib localhost/fedora latest qcow2 disk_config/disk.toml
-_rebuild-bib $target_image $tag $type $config: (build target_image tag) && (_build-bib target_image tag type config)
-
-# Build a QCOW2 virtual machine image
-[group('Build Virtal Machine Image')]
-build-qcow2 $target_image=("localhost/" + image_name) $tag=default_tag: && (_build-bib target_image tag "qcow2" "disk_config/disk.toml")
-
-# Build a RAW virtual machine image
-[group('Build Virtal Machine Image')]
-build-raw $target_image=("localhost/" + image_name) $tag=default_tag: && (_build-bib target_image tag "raw" "disk_config/disk.toml")
-
-# Build an ISO virtual machine image
-[group('Build Virtal Machine Image')]
-build-iso $target_image=("localhost/" + image_name) $tag=default_tag: && (_build-bib target_image tag "iso" "disk_config/iso.toml")
-
-# Rebuild a QCOW2 virtual machine image
-[group('Build Virtal Machine Image')]
-rebuild-qcow2 $target_image=("localhost/" + image_name) $tag=default_tag: && (_rebuild-bib target_image tag "qcow2" "disk_config/disk.toml")
-
-# Rebuild a RAW virtual machine image
-[group('Build Virtal Machine Image')]
-rebuild-raw $target_image=("localhost/" + image_name) $tag=default_tag: && (_rebuild-bib target_image tag "raw" "disk_config/disk.toml")
-
-# Rebuild an ISO virtual machine image
-[group('Build Virtal Machine Image')]
-rebuild-iso $target_image=("localhost/" + image_name) $tag=default_tag: && (_rebuild-bib target_image tag "iso" "disk_config/iso.toml")
-
-# Run a virtual machine with the specified image type and configuration
-_run-vm $target_image $tag $type $config:
-    #!/usr/bin/bash
-    set -eoux pipefail
-
-    # Determine the image file based on the type
-    image_file="output/${type}/disk.${type}"
-    if [[ $type == iso ]]; then
-        image_file="output/bootiso/install.iso"
-    fi
-
-    # Build the image if it does not exist
-    if [[ ! -f "${image_file}" ]]; then
-        just "build-${type}" "$target_image" "$tag"
-    fi
-
-    # Determine an available port to use
-    port=8006
-    while grep -q :${port} <<< $(ss -tunalp); do
-        port=$(( port + 1 ))
-    done
-    echo "Using Port: ${port}"
-    echo "Connect to http://localhost:${port}"
-
-    # Set up the arguments for running the VM
-    run_args=()
-    run_args+=(--rm --privileged)
-    run_args+=(--pull=newer)
-    run_args+=(--publish "127.0.0.1:${port}:8006")
-    run_args+=(--env "CPU_CORES=4")
-    run_args+=(--env "RAM_SIZE=8G")
-    run_args+=(--env "DISK_SIZE=64G")
-    run_args+=(--env "TPM=Y")
-    run_args+=(--env "GPU=Y")
-    run_args+=(--device=/dev/kvm)
-    run_args+=(--volume "${PWD}/${image_file}":"/boot.${type}")
-    run_args+=(docker.io/qemux/qemu)
-
-    # Run the VM and open the browser to connect
-    (sleep 30 && xdg-open http://localhost:"$port") &
-    podman run "${run_args[@]}"
-
-# Run a virtual machine from a QCOW2 image
-[group('Run Virtal Machine')]
-run-vm-qcow2 $target_image=("localhost/" + image_name) $tag=default_tag: && (_run-vm target_image tag "qcow2" "disk_config/disk.toml")
-
-# Run a virtual machine from a RAW image
-[group('Run Virtal Machine')]
-run-vm-raw $target_image=("localhost/" + image_name) $tag=default_tag: && (_run-vm target_image tag "raw" "disk_config/disk.toml")
-
-# Run a virtual machine from an ISO
-[group('Run Virtal Machine')]
-run-vm-iso $target_image=("localhost/" + image_name) $tag=default_tag: && (_run-vm target_image tag "iso" "disk_config/iso.toml")
-
-# Run a virtual machine using systemd-vmspawn
-[group('Run Virtal Machine')]
-spawn-vm rebuild="0" type="qcow2" ram="6G":
-    #!/usr/bin/env bash
-
-    set -euo pipefail
-
-    [ "{{ rebuild }}" -eq 1 ] && echo "Rebuilding the ISO" && just build-vm {{ rebuild }} {{ type }}
-
-    systemd-vmspawn \
-      -M "bootc-image" \
-      --console=gui \
-      --cpus=2 \
-      --ram=$(echo {{ ram }}| /usr/bin/numfmt --from=iec) \
-      --network-user-mode \
-      --vsock=false --pass-ssh-key=false \
-      -i ./output/**/*.{{ type }}
-
-
-# Runs shell check on all Bash scripts
-lint:
-    #!/usr/bin/env bash
-    set -eoux pipefail
-    # Check if shellcheck is installed
-    if ! command -v shellcheck &> /dev/null; then
-        echo "shellcheck could not be found. Please install it."
-        exit 1
-    fi
-    # Run shellcheck on all Bash scripts
-    /usr/bin/find . -iname "*.sh" -type f -exec shellcheck "{}" ';'
-
-# Runs shfmt on all Bash scripts
-format:
-    #!/usr/bin/env bash
-    set -eoux pipefail
-    # Check if shfmt is installed
-    if ! command -v shfmt &> /dev/null; then
-        echo "shellcheck could not be found. Please install it."
-        exit 1
-    fi
-    # Run shfmt on all Bash scripts
-    /usr/bin/find . -iname "*.sh" -type f -exec shfmt --write "{}" ';'
```

LICENSE (2 lines changed)

```diff
@@ -186,7 +186,7 @@
       same "printed page" as the copyright notice for easier
       identification within third-party archives.
 
-   Copyright [yyyy] [name of copyright owner]
+   Copyright [2025] [ManInDark]
 
    Licensed under the Apache License, Version 2.0 (the "License");
    you may not use this file except in compliance with the License.
```

Makefile (normal file, 15 lines added)

```diff
@@ -0,0 +1,15 @@
+clean:
+	sudo rm -fr flathub output
+	sudo podman system prune
+
+oci:
+	sudo podman build --network=host -t podman-image .
+
+CONTAINER_IMAGE=localhost/podman-image
+FILESYSTEM_TYPE=ext4
+qcow:
+	mkdir -p output
+	sudo podman run --rm -it --privileged --pull=newer --security-opt label=type:unconfined_t -v ./disk_config/user.toml:/config.toml:ro -v ./output:/output -v /var/lib/containers/storage:/var/lib/containers/storage quay.io/centos-bootc/bootc-image-builder:latest --type=qcow2 --rootfs $(FILESYSTEM_TYPE) $(CONTAINER_IMAGE)
+
+run:
+	qemu-system-x86_64 -k de -L /usr/share/qemu/keymaps -M accel=kvm -cpu host -smp 2 -m 4096 -serial stdio -snapshot output/qcow2/disk.qcow2
```

275

README.md

275

README.md

@@ -1,264 +1,23 @@

|

||||

# image-template

|

||||

# custom bootc image

|

||||

|

||||

This repository is meant to be a template for building your own custom [bootc](https://github.com/bootc-dev/bootc) image. This template is the recommended way to make customizations to any image published by the Universal Blue Project.

|

||||

This repository contains all information necessary to build my very own customized bootc image.

|

||||

|

||||

# Community

|

||||

I've attached various links I used to learn about how all of this works below, they are in no particular order.

|

||||

|

||||

If you have questions about this template after following the instructions, try the following spaces:

|

||||

- [Universal Blue Forums](https://universal-blue.discourse.group/)

|

||||

- [Universal Blue Discord](https://discord.gg/WEu6BdFEtp)

|

||||

- [bootc discussion forums](https://github.com/bootc-dev/bootc/discussions) - This is not an Universal Blue managed space, but is an excellent resource if you run into issues with building bootc images.

|

||||

## Build

|

||||

|

||||

# How to Use

|

||||

A qcow image may be built by first calling `make oci` to build the oci image and then `make qcow` to turn it into a bootable qcow image.

|

||||

|

||||

To get started on your first bootc image, simply read and follow the steps in the next few headings.

|

||||

If you prefer instructions in video form, TesterTech created an excellent tutorial, embedded below.

|

||||

## Links

|

||||

|

||||

[](https://www.youtube.com/watch?v=IxBl11Zmq5wE)

|

||||

|

||||

## Step 0: Prerequisites

|

||||

|

||||

These steps assume you have the following:

|

||||

- A Github Account

|

||||

- A machine running a bootc image (e.g. Bazzite, Bluefin, Aurora, or Fedora Atomic)

|

||||

- Experience installing and using CLI programs

|

||||

|

||||

## Step 1: Preparing the Template

|

||||

|

||||

### Step 1a: Copying the Template

|

||||

|

||||

Select `Use this Template` on this page. You can set the name and description of your repository to whatever you would like, but all other settings should be left untouched.

|

||||

|

||||

Once you have finished copying the template, you need to enable the Github Actions workflows for your new repository.

|

||||

To enable the workflows, go to the `Actions` tab of the new repository and click the button to enable workflows.

|

||||

|

||||

### Step 1b: Cloning the New Repository

|

||||

|

||||

Here I will defer to the much superior GitHub documentation on the matter. You can use whichever method is easiest.

|

||||

[GitHub Documentation](https://docs.github.com/en/repositories/creating-and-managing-repositories/cloning-a-repository)

|

||||

|

||||

Once you have the repository on your local drive, proceed to the next step.

|

||||

|

||||

## Step 2: Initial Setup

### Step 2a: Creating a Cosign Key

Container signing is important for end-user security and is enabled on all Universal Blue images. By default, the image builds *will fail* if you don't set up a signing key.

First, install the [cosign CLI tool](https://edu.chainguard.dev/open-source/sigstore/cosign/how-to-install-cosign/#installing-cosign-with-the-cosign-binary).
With the cosign tool installed, run the following inside your repository folder:

```bash
# Writes cosign.key (private) and cosign.pub (public) to the current folder, with an empty password
COSIGN_PASSWORD="" cosign generate-key-pair
```

The signing key will be used in GitHub Actions and will not work if it is password-protected.

> [!WARNING]
> Be careful to *never* accidentally commit `cosign.key` into your git repo. If this key goes out to the public, the security of your repository is compromised.

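One way to guard against that mistake is to list the private key in `.gitignore`. The sketch below is a hypothetical helper (`ensure_key_ignored` is not part of the template, and the template may already ship a `.gitignore` entry for the key); it appends `cosign.key` only when that exact line is missing, so running it repeatedly is harmless.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not part of the template: make sure git ignores the
# private signing key. Run it from the repository root.
ensure_key_ignored() {
  local ignore_file="${1:-.gitignore}"
  # -q: quiet, -x: whole-line match, -F: fixed string; a missing file counts as "not listed"
  if ! grep -qxF 'cosign.key' "$ignore_file" 2>/dev/null; then
    # Start a new line first if the file exists but lacks a trailing newline
    if [ -s "$ignore_file" ] && [ -n "$(tail -c 1 "$ignore_file")" ]; then
      printf '\n' >> "$ignore_file"
    fi
    printf '%s\n' 'cosign.key' >> "$ignore_file"
  fi
}
```

Afterwards, `git check-ignore cosign.key` should print the file name, confirming that git will skip it.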

Next, you need to add the key to GitHub. This makes use of GitHub's secret signing system.

|

||||

|

||||

<details>

|

||||

<summary>Using the Github Web Interface (preferred)</summary>

|

||||

|

||||

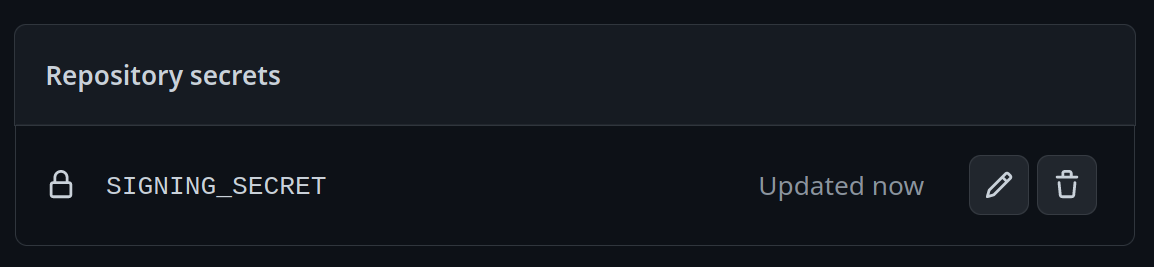

Go to your repository settings, under `Secrets and Variables` -> `Actions`

|

||||

|

||||

Add a new secret and name it `SIGNING_SECRET`, then paste the contents of `cosign.key` into the secret and save it. Make sure it's the .key file and not the .pub file. Once done, it should look like this:

|

||||

|

||||

</details>

|

||||

<details>
<summary>Using the GitHub CLI</summary>

If you have the GitHub CLI (`gh`) installed, run the following from your repository folder:

```bash
gh secret set SIGNING_SECRET < cosign.key
```

</details>

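Because the workflow needs the private key, it is worth checking which file you are about to upload. The hypothetical helper below relies on the PEM header line: cosign writes its private key with a header ending in `PRIVATE KEY-----` (the exact wording has varied between cosign versions), while `cosign.pub` begins with `-----BEGIN PUBLIC KEY-----`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the file contains a PEM
# "-----BEGIN ... PRIVATE KEY-----" header line.
is_private_key() {
  grep -q '^-----BEGIN .*PRIVATE KEY-----$' "$1"
}

# Intended use (left commented out so the sketch runs without gh installed):
# is_private_key cosign.key && gh secret set SIGNING_SECRET < cosign.key
```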

### Step 2b: Choosing Your Base Image

To choose a base image, simply modify the line in the Containerfile starting with `FROM`. This is the image your image derives from, and it is your starting point for modifications.
For a base image, you can choose any of the Universal Blue images or start from a Fedora Atomic system. Below this paragraph is a dropdown with a non-exhaustive list of potential base images.

<details>
<summary>Base Images</summary>

- Bazzite: `ghcr.io/ublue-os/bazzite:stable`
- Aurora: `ghcr.io/ublue-os/aurora:stable`
- Bluefin: `ghcr.io/ublue-os/bluefin:stable`
- Universal Blue Base: `ghcr.io/ublue-os/base-main:latest`
- Fedora: `quay.io/fedora/fedora-bootc:42`

You can find more Universal Blue images on the [packages page](https://github.com/orgs/ublue-os/packages).

</details>

If you don't know which image to pick, choosing the one your system is currently on is the best bet for a smooth transition. To find out which image your system currently uses, run the following command:

```bash
sudo bootc status
```

This will show you all the info you need about your current image. The image you are currently on is displayed after `Booted image:`. Paste that image reference after the `FROM` statement in the Containerfile to set it as your base image.

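If you prefer to extract that reference with a command, a small filter over the human-readable output is enough. This is a sketch that assumes the output contains a line of the form `Booted image: <reference>`, as described above; the layout of `bootc status` may differ between bootc versions, and `booted_image` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical filter: read `bootc status` output on stdin and print the
# reference that follows the first "Booted image:" label.
booted_image() {
  sed -n 's/.*Booted image:[[:space:]]*//p' | head -n 1
}
```

Usage: `sudo bootc status | booted_image` prints just the reference (for example `ghcr.io/ublue-os/bazzite:stable` on a Bazzite system), ready to paste after `FROM`.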

### Step 2c: Changing Names

Change the first line in the [Justfile](./Justfile) to your image's name.

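That edit can also be scripted. The sketch below assumes the first line of the Justfile still contains the template's default name `image-template` (the default listed under *Environment Variables* further down) and replaces it on that line only. `set_image_name` is a hypothetical helper, the name check is a conservative subset of what registries accept, and `sed -i` is used in its GNU form.

```bash
#!/usr/bin/env bash
# Hypothetical helper: replace the default "image-template" name on line 1
# of the Justfile with the name given as the first argument.
set_image_name() {
  local new_name="$1" justfile="${2:-Justfile}"
  # The name ends up in a registry path, so only allow lowercase letters,
  # digits, dots, dashes and underscores (this also keeps "/" out of sed).
  case "$new_name" in
    '' | *[!a-z0-9._-]*)
      echo "invalid image name: $new_name" >&2
      return 1
      ;;
  esac
  sed -i "1s/image-template/${new_name}/" "$justfile"
}
```

Usage: `set_image_name my-image` from the repository root.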

To commit and push all the files changed and added in Step 2 to your GitHub repository:

```bash
# cosign.pub is the public key; cosign.key must never be added
git add Containerfile Justfile cosign.pub
git commit -m "Initial Setup"
git push
```

Once pushed, open the Actions tab on your GitHub repository's page. When the build finishes, a green checkmark appears next to the latest commit, which means your new image is ready!

## Step 3: Switch to Your Image

From your bootc system, run the following command, substituting your GitHub username and image name where noted:

```bash
sudo bootc switch ghcr.io/<username>/<image_name>
```

This should queue your image for the next boot, so you can reboot as soon as the command finishes. You have officially set up your custom image! See the following section for an explanation of the important parts of the template for customization.

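One detail that often trips people up: container registry repository names must be lowercase, so a username such as `ManInDark` appears as `manindark` in the image path. The hypothetical helper below builds the reference in that form. It assumes the image was published as `ghcr.io/<username>/<image_name>`, exactly as in the command above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the ghcr.io reference for a username and image
# name, lowercased because OCI repository names cannot contain uppercase.
image_ref() {
  printf 'ghcr.io/%s/%s\n' "$1" "$2" | tr '[:upper:]' '[:lower:]'
}
```

Usage: `sudo bootc switch "$(image_ref ManInDark bootc-image)"` switches to `ghcr.io/manindark/bootc-image`.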

# Repository Contents

## Containerfile

The [Containerfile](./Containerfile) defines the operations used to customize the selected image. This file is the entrypoint for your image build, and works exactly like a regular Podman Containerfile. For reference, please see the [Podman Documentation](https://docs.podman.io/en/latest/Introduction.html).

## build.sh

The [build.sh](./build_files/build.sh) file is called from your Containerfile. It is the best place to install new packages or make any other customization to your system. It contains customization examples for your perusal.

## build.yml

The [build.yml](./.github/workflows/build.yml) GitHub Actions workflow creates your custom OCI image and publishes it to the GitHub Container Registry (GHCR). By default, the image name will match the GitHub repository name. Several environment variables at the start of the workflow may be worth changing.

# Building Disk Images

This template provides an out-of-the-box workflow for creating disk images (ISO, qcow, raw) from your custom OCI image, which you can use to install directly onto your machines.

This template also provides a way to upload the disk images generated by the workflow to an S3 bucket. The disk images are also available as an artifact of the job, if you wish to use an alternate provider. To upload to S3, the workflow uses [rclone](https://rclone.org/), which supports [many S3 providers](https://rclone.org/s3/).

## Setting Up ISO Builds

The [build-disk.yml](./.github/workflows/build-disk.yml) GitHub Actions workflow creates a disk image from your OCI image using [bootc-image-builder](https://osbuild.org/docs/bootc/). To use this workflow, complete the following steps:

1. Modify `disk_config/iso.toml` to point to your custom container image before generating an ISO image.
2. If you changed your image name from the default in `build.yml`, edit the `IMAGE_REGISTRY`, `IMAGE_NAME` and `DEFAULT_TAG` environment variables in `build-disk.yml` to the correct values. If you did not make changes, skip this step.
3. Finally, if you want to upload your disk images to S3, add your S3 configuration to the repository's Actions secrets. You can find these in your repository settings, under `Secrets and Variables` -> `Actions`. You will need to add the following:
   - `S3_PROVIDER` - Must match one of the values from the [supported list](https://rclone.org/s3/)
   - `S3_BUCKET_NAME` - Your unique bucket name
   - `S3_ACCESS_KEY_ID` - It is recommended that you make a separate key just for this workflow
   - `S3_SECRET_ACCESS_KEY` - See above.
   - `S3_REGION` - The region your bucket lives in. If you do not know, set this value to `auto`.
   - `S3_ENDPOINT` - This value is specific to your bucket as well.

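If you prefer the GitHub CLI, the six secrets above can be set with `gh secret set`, the same way as `SIGNING_SECRET`. The sketch below assumes you have first exported each value as an environment variable with the same name as the secret. `missing_s3_vars` and `push_s3_secrets` are hypothetical helpers; only the first one runs without `gh` installed.

```bash
#!/usr/bin/env bash
# Secret names read by the disk workflow, as listed above.
S3_SECRETS="S3_PROVIDER S3_BUCKET_NAME S3_ACCESS_KEY_ID S3_SECRET_ACCESS_KEY S3_REGION S3_ENDPOINT"

# Hypothetical helper: print every secret whose environment variable is
# unset or empty, and fail if any are missing.
missing_s3_vars() {
  local name missing=0
  for name in $S3_SECRETS; do
    # printenv prints an exported variable's value, or nothing if it is unset
    if [ -z "$(printenv "$name")" ]; then
      echo "$name"
      missing=1
    fi
  done
  return "$missing"
}

# Hypothetical helper: store each value as a repository secret. The value is
# piped on stdin so it does not appear in the process list.
push_s3_secrets() {
  local name
  if ! missing_s3_vars >&2; then
    echo "export the variables listed above first" >&2
    return 1
  fi
  for name in $S3_SECRETS; do
    printf '%s' "$(printenv "$name")" | gh secret set "$name"
  done
}
```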

Once the workflow has finished, you'll find the disk images either in your S3 bucket or under `Artifacts` in the workflow run's summary.

# Artifacthub

This template comes with the necessary tooling to index your image on [artifacthub.io](https://artifacthub.io). Use the `artifacthub-repo.yml` file at the root to verify yourself as the publisher. This is important to you for a few reasons:

- The value of Artifact Hub is that it's one place for people to index their custom images, and since we depend on each other to learn, it helps grow the community.
- You get to see your pet project listed with the other cool projects in Cloud Native.
- Since the site puts your README front and center, it's a good way to learn how to write a good README, learn some marketing, find your audience, etc.

[Discussion Thread](https://universal-blue.discourse.group/t/listing-your-custom-image-on-artifacthub/6446)

# Justfile Documentation

The `Justfile` contains various commands and configurations for building and managing container images and virtual machine images using Podman and other utilities.
To use it, you must have [just](https://just.systems/man/en/introduction.html) installed, either from your package manager or manually. It is available by default on all Universal Blue images.

## Environment Variables

- `image_name`: The name of the image (default: "image-template").
- `default_tag`: The default tag for the image (default: "latest").
- `bib_image`: The Bootc Image Builder (BIB) image (default: "quay.io/centos-bootc/bootc-image-builder:latest").

## Building The Image

### `just build`

Builds a container image using Podman.

```bash
just build $target_image $tag
```

Arguments:

- `$target_image`: The name under which the built image is tagged (default: `$image_name`).
- `$tag`: The tag for the image (default: `$default_tag`).

## Building and Running Virtual Machines and ISOs

The commands below all work with QCOW2 images. To produce or use a different type of image, substitute that type for `qcow2` in the command name. The available types are `qcow2`, `iso`, and `raw`.

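The naming pattern can be captured in a tiny wrapper. The sketch below is hypothetical (it is not one of the template's recipes): it accepts only the three types listed and prints the matching `build-<type>` recipe name, which you can then hand to `just`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a disk image type to the Justfile recipe that
# builds it, following the build-<type> naming described above.
disk_recipe() {
  case "$1" in
    qcow2 | iso | raw) printf 'build-%s\n' "$1" ;;
    *)
      echo "unsupported image type: $1 (expected qcow2, iso or raw)" >&2
      return 1
      ;;
  esac
}
```

Usage: `recipe=$(disk_recipe iso) && just "$recipe"` runs `just build-iso`, and stops early on a typo such as `isp`.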

### `just build-qcow2`

Builds a QCOW2 virtual machine image.

```bash
just build-qcow2 $target_image $tag
```

### `just rebuild-qcow2`

Rebuilds a QCOW2 virtual machine image.

```bash
just rebuild-qcow2 $target_image $tag
```

### `just run-vm-qcow2`

Runs a virtual machine from a QCOW2 image.

```bash
just run-vm-qcow2 $target_image $tag
```

### `just spawn-vm`

Runs a virtual machine using systemd-vmspawn.

```bash
# Arguments are positional; these are the defaults (rebuild="0", type="qcow2", ram="6G")
just spawn-vm 0 qcow2 6G
```

## File Management

### `just check`

Checks the syntax of all `.just` files and the `Justfile`.

### `just fix`

Fixes the syntax of all `.just` files and the `Justfile`.

### `just clean`

Cleans the repository by removing build artifacts.

### `just lint`

Runs ShellCheck on all Bash scripts.

### `just format`

Runs shfmt on all Bash scripts.

## Additional resources

For additional driver support, ublue maintains a set of scripts and container images available at [ublue-akmod](https://github.com/ublue-os/akmods). These images include the necessary scripts to install multiple kernel drivers within the container (Nvidia, OpenRazer, Framework...). The documentation provides guidance on how to properly integrate these drivers into your container image.

## Community Examples

These are images derived from this template (or similar enough to this template). Reference them when building your image!

- [m2Giles' OS](https://github.com/m2giles/m2os)
- [bOS](https://github.com/bsherman/bos)
- [Homer](https://github.com/bketelsen/homer/)
- [Amy OS](https://github.com/astrovm/amyos)
- [VeneOS](https://github.com/Venefilyn/veneos)
- [getting started](https://docs.fedoraproject.org/en-US/bootc/building-containers)
- [Fedora Silverblue](https://fedoraproject.org/atomic-desktops/silverblue/download) is a fully prepared GNOME desktop distribution.
- [cicd-bootc](https://github.com/nzwulfin/cicd-bootc) example repository with a GitHub Actions workflow for building images
- [universal-blue](https://universal-blue.org) prebuilt image provider (Aurora, Bazzite, Bluefin, uCore)
- [image building guidance](https://bootc-dev.github.io/bootc/building/guidance.html)
- [authentication in images](https://docs.fedoraproject.org/en-US/bootc/authentication)
- [provisioning with qemu and libvirt](https://docs.fedoraproject.org/en-US/bootc/qemu-and-libvirt)
- [fedora base images](https://docs.fedoraproject.org/en-US/bootc/base-images)
- [bootc image builder](https://github.com/osbuild/bootc-image-builder)
- [building bootc images from scratch](https://docs.fedoraproject.org/en-US/bootc/building-from-scratch)
- [building derived images](https://docs.fedoraproject.org/en-US/bootc/building-containers)

@@ -1,8 +0,0 @@
# This file is completely optional, but if you want to index your image on https://artifacthub.io/ you can
# Sign up and add the Repository ID to the right field. Owners fields are optional.
# Examples: https://artifacthub.io/packages/search?ts_query_web=ublue&sort=relevance&page=1

repositoryID: my-custom-id-here # Fill in with your own credentials
owners: # (optional, used to claim repository ownership)
  - name: My Name
    email: my_email@email.com

@@ -2,23 +2,11 @@

set -ouex pipefail

### Install packages

# Packages can be installed from any enabled yum repo on the image.
# RPMfusion repos are available by default in ublue main images
# List of rpmfusion packages can be found here:
# https://mirrors.rpmfusion.org/mirrorlist?path=free/fedora/updates/39/x86_64/repoview/index.html&protocol=https&redirect=1

# this installs a package from fedora repos
dnf5 install -y tmux

# Use a COPR Example:
#
# dnf5 -y copr enable ublue-os/staging
# dnf5 -y install package
# Disable COPRs so they don't end up enabled on the final image:
# dnf5 -y copr disable ublue-os/staging

#### Example for enabling a System Unit File

systemctl enable podman.socket
dnf5 install -y glibc-langpack-en glibc-langpack-de
dnf5 install -y --setopt=exclude=gnome-tour,malcontent-control gnome-shell gnome-keyring gnome-keyring-pam gnome-bluetooth alacritty
dnf5 install -y gnome-calculator gnome-disk-utility gnome-backgrounds
dnf5 install -y curl git btop tmux flatpak
flatpak remote-add --if-not-exists flathub https://dl.flathub.org/repo/flathub.flatpakrepo
echo "LANG=de_DE.UTF-8" >> /etc/default/locale
dnf update -y
dnf clean all

8

disk_config/user.toml

Normal file

@@ -0,0 +1,8 @@
[[customizations.user]]
name = "testuser"
password = "test"
groups = ["wheel"]

[[customizations.filesystem]]
mountpoint = "/"
minsize = "10 GiB"
